Nornir using an Ansible Inventory (Part 2)

Reference code for this article is located here.

In Part 1 of this series we covered: installing Nornir, using Ansible inventory in Nornir, Nornir tasks and results, and using Jinja2 for configuration rendering.

In this post, we'll start with our rendered Jinja2 configurations and write these configurations to disk. We'll also use Nornir plus NAPALM to retrieve and save the current device configurations. Finally, we will stage a set of configuration changes, obtain a live diff between the current and proposed configurations, and then commit these changes to the devices.

Saving Jinja2 Configurations to Disk

At this point we have successfully generated configurations using Jinja2 templates. Now let's go ahead and write those configurations to disk.

Note that we will use the same "write_configs" function to save both the rendered Jinja2 configurations and the device backups. A simple "backup" flag differentiates between a newly rendered configuration and a backup:

import pathlib

# In Nornir 2.x, write_file lives under nornir.plugins.tasks.files
from nornir.plugins.tasks.files import write_file


def write_configs(task, backup=False):
    filename = task.host["dev_hostname"]
    if backup is False:
        # Create a "./configs" directory
        pathlib.Path("configs").mkdir(exist_ok=True)
        task.run(
            task=write_file,
            filename=f"configs/{filename}",
            content=task.host["config"],
        )
    else:
        # Create a "./backup" directory
        pathlib.Path("backup").mkdir(exist_ok=True)
        task.run(
            task=write_file,
            filename=f"backup/{filename}",
            content=task.host["backup_config"],
        )

In this task, we can write either the newly rendered configuration or the backup configuration to disk (using the built-in Nornir "write_file" task). In Part 1 of this series, we stored the value of the rendered configuration in the "task.host['config']" variable. Similarly, we will store the backup configuration in "task.host['backup_config']".
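Stripped of Nornir, the "backup" flag only decides which directory a host's text lands in. Here is a minimal standard-library sketch of that decision. The "save_config" helper and its "base_dir" parameter are hypothetical, introduced only for illustration, and are not part of the article's code:

```python
import pathlib
import tempfile


def save_config(hostname: str, content: str, backup: bool = False,
                base_dir: str = ".") -> pathlib.Path:
    """Hypothetical helper mirroring write_configs' choice of target directory."""
    # The backup flag picks exactly one of the two output directories.
    subdir = "backup" if backup else "configs"
    target_dir = pathlib.Path(base_dir) / subdir
    # exist_ok=True makes reruns safe, like the article's mkdir calls.
    target_dir.mkdir(parents=True, exist_ok=True)
    path = target_dir / hostname
    path.write_text(content)
    return path


if __name__ == "__main__":
    # Write into a throwaway directory so the example leaves nothing behind.
    with tempfile.TemporaryDirectory() as tmp:
        rendered = save_config("sea-eos-1", "hostname sea-eos-1\n", base_dir=tmp)
        saved = save_config("sea-eos-1", "hostname eos-base\n", backup=True, base_dir=tmp)
        print(rendered.relative_to(tmp), saved.relative_to(tmp))
```

Keeping one function with a flag, rather than two near-identical functions, means the file-naming convention (one file per "dev_hostname") only has to be maintained in one place.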

We can now run these tasks in the main section of our code to validate that our device configurations do indeed get written to disk.

if __name__ == "__main__":
    render_task = nr.run(task=render_configs)
    write_task = nr.run(task=write_configs)

$ ls -ltr configs/
total 20
-rw-rw-r-- 1 kbyers kbyers 16098 Apr 30 19:34 sea-nxos-1
-rw-rw-r-- 1 kbyers kbyers 997 Apr 30 19:34 sea-eos-1

Sure enough, our files do get created.

Back Up the Running Configurations

Before we start actually making changes to devices, let's ensure we can use Nornir to back up the existing device configurations.

The process for this is similar to what we've already done: create a task function that accepts the task object as an argument, add some logic to ensure we are handling the particular type of device correctly, and then run the task against the device.

Now, in the process of working through this, I ran into a couple of issues. First, for NX-OS, we need to retrieve a checkpoint file instead of simply using the get_config() method. Second, for EOS, we can use the get_config() method, but we will need to ensure that "eos_autoComplete" is set to True for NAPALM (this relates to an issue with deploying the configuration, which we will expand on later in this article).

NX-OS

Let's create a new "backup_configs" task. In this task, we'll first address retrieving the NX-OS checkpoint file. Doing this also lets us explore Nornir's connection handling:

def backup_configs(task):
    if task.host.napalm_network_os == "nxos":
        task.host.open_connection("napalm", None)
        r = task.host.connections["napalm"].connection._get_checkpoint_file()
        task.host["backup_config"] = r

In the above code, we check whether the current host is of type "nxos" (as described by our variable "napalm_network_os"). If so, then we create a NAPALM connection to that device.

The "open_connection" method in Nornir allows us to create a connection of our choice using the Nornir connection plugins (in this case a NAPALM connection).

We can then use that NAPALM connection to execute any method we choose. In this case, we execute the NAPALM NX-OS driver's "_get_checkpoint_file" method (note the leading underscore: this is a private method rather than part of NAPALM's public API). Using this method, we retrieve a checkpoint from the remote device and save it under the host's "backup_config" key.
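The pattern here is "open once, then look up by plugin name": the connection object is filed in "task.host.connections" under "napalm", and the driver itself sits behind its ".connection" attribute. The toy model below illustrates only that pattern. "ToyHost", "ConnectionWrapper" and "FakeNapalmConnection" are hypothetical stand-ins (not Nornir's or NAPALM's code), the toy "open_connection" takes no configuration argument, and the fake driver returns canned text instead of contacting a device:

```python
from typing import Dict


class FakeNapalmConnection:
    """Stand-in for a NAPALM driver; returns canned text, no device involved."""

    def _get_checkpoint_file(self) -> str:
        return "!Command: Checkpoint cmd vdc 1\n"


class ConnectionWrapper:
    """Mimics the object stored in host.connections, which exposes .connection."""

    def __init__(self, connection: FakeNapalmConnection) -> None:
        self.connection = connection


class ToyHost:
    """Hypothetical host with per-host data and a registry of open connections."""

    def __init__(self, name: str, platform: str) -> None:
        self.name = name
        self.platform = platform
        self.data: Dict[str, str] = {}
        self.connections: Dict[str, ConnectionWrapper] = {}

    def open_connection(self, plugin: str) -> ConnectionWrapper:
        # Build the connection and register it under the plugin name.
        wrapper = ConnectionWrapper(FakeNapalmConnection())
        self.connections[plugin] = wrapper
        return wrapper

    def __setitem__(self, key: str, value: str) -> None:
        # Mirrors task.host["backup_config"] = ... storing arbitrary host data.
        self.data[key] = value

    def __getitem__(self, key: str) -> str:
        return self.data[key]


host = ToyHost("sea-nxos-1", "nxos")
if host.platform == "nxos":
    host.open_connection("napalm")
    host["backup_config"] = host.connections["napalm"].connection._get_checkpoint_file()
print(host["backup_config"])
```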

We can then print out the results to see what happens:

if __name__ == "__main__":
    render_task = nr.run(task=render_configs)
    write_task = nr.run(task=write_configs)
    backup_task = nr.run(task=backup_configs)
    print_result(backup_task)

Uh-oh, it looks like we've hit a snag (the message below was embedded inside a much larger exception string):

ModuleImportError('Please provide a valid driver name.') napalm.base.exceptions.ModuleImportError:
Please provide a valid driver name.

In my Packet Pushers VDC repository, I had an Ansible inventory variable named "napalm_network_os". This was used to tell Ansible the network device operating system. Nornir has a similar concept, except that Nornir looks for an attribute named "platform". Consequently, let's update our inventory to use the new "platform" name.

[SNIP]

[arista-eos:vars]
platform=eos

[SNIP]

[cisco-nxos:vars]
platform=nxos

We can now update our "backup_configs" task to use the "platform" attribute instead of "napalm_network_os":

def backup_configs(task):
    if task.host.platform == "nxos":
        task.host.open_connection("napalm", None)
        r = task.host.connections["napalm"].connection._get_checkpoint_file()
        task.host["backup_config"] = r

Running our code again exposes another little issue to fix:

[SNIP]
requests.exceptions.SSLError: HTTPSConnectionPool(host='10.0.1.102', port=22): Max retries exceeded with url: /ins (Caused by SSLError(SSLError(1, '[SSL: WRONG_VERSION_NUMBER] wrong version number (_ssl.c:847)'),))
^^^^ END backup_configs ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

The "nxos" platform type in NAPALM uses nxapi_plumbing, which sends and receives over HTTPS (NX-API), but we are trying to connect on TCP port 22 (the SSH port)!

Where is this port 22 coming from? Looking into the Nornir source code for the Ansible inventory plugin, we can see that "ansible_port" is being mapped to "port" in Nornir, which is clearly getting passed down to NAPALM. You can see this here. So, we can either:

- Update our "ansible_port" to reflect the API port (port 444 in my case), or...

- Remove "ansible_port" and simply add a "port" variable to our inventory.
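Both inventory problems (no "platform", wrong "port") come down to which variables become first-class Nornir host attributes and which are left as free-form data. Below is a simplified, hypothetical model of that split; it is not the plugin's source. Only the "ansible_port" to "port" mapping is confirmed above. The other three mapped names and the "RESERVED" set of attribute names are our assumptions and may differ between Nornir versions:

```python
from typing import Any, Dict, Tuple

# Illustrative mapping. The article confirms ansible_port -> port; the other
# three entries are our assumption and may differ between Nornir versions.
ANSIBLE_TO_NORNIR = {
    "ansible_host": "hostname",
    "ansible_port": "port",
    "ansible_user": "username",
    "ansible_password": "password",
}

# Assumed set of keys that become host attributes rather than free-form data.
RESERVED = {"hostname", "port", "username", "password", "platform", "connection_options"}


def split_host_vars(ansible_vars: Dict[str, Any]) -> Tuple[Dict[str, Any], Dict[str, Any]]:
    """Hypothetical model: return (attributes, data) for one host's variables."""
    attributes: Dict[str, Any] = {}
    data: Dict[str, Any] = {}
    for key, value in ansible_vars.items():
        # Rename Ansible-specific keys first, then route reserved names to attributes.
        name = ANSIBLE_TO_NORNIR.get(key, key)
        if name in RESERVED:
            attributes[name] = value
        else:
            data[key] = value
    return attributes, data


# Original inventory: port 22 is picked up, but "platform" is never set,
# so NAPALM has no driver name to load.
before_attrs, before_data = split_host_vars({"ansible_port": 22, "napalm_network_os": "nxos"})
print(before_attrs, before_data)

# Fixed inventory: explicit platform and API port.
after_attrs, _ = split_host_vars({"platform": "nxos", "port": 444})
print(after_attrs)
```

In this model, "napalm_network_os" is simply carried along as data, which is why renaming it to "platform" was necessary.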

I'll opt for the latter as that feels a bit simpler to me:

[SNIP]

[arista-eos:vars]

port=444

[SNIP]

[cisco-nxos:vars]

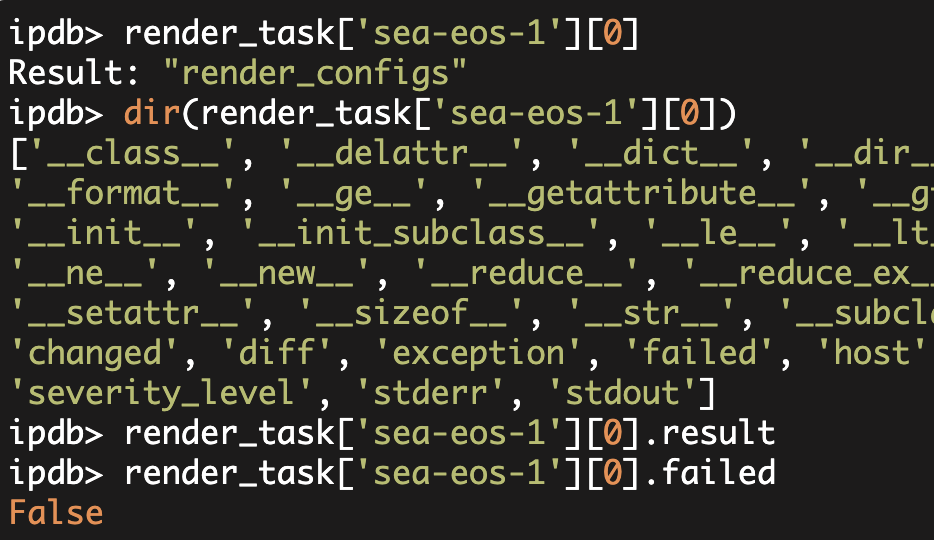

port=444Using Pdb we can inspect what is being returned to us and verify that our "backup_config" data is indeed getting updated:

[SNIP]
--Return--
None
> /home/vagrant/packet_pushers_vdc/deploy.py(69)<module>()
68 import ipdb
---> 69 ipdb.set_trace()
70

ipdb> nr.inventory.hosts['sea-nxos-1']['backup_config']
'\n!Command: Checkpoint cmd vdc 1\n!Time: Wed Feb 27 20:48:57 2019\n\nversion 7.0(3)I7(5) Bios:version \nhostname nxos-base\nclass-map type network-qos c-nq1\n description Default class on qos-group 1\nmatch qos-group 1\
[SNIP]
!#logging console\n\n\n'
ipdb>

Perfect, we can now move on to our EOS backup.

EOS

Snagging a viable backup configuration for the EOS platform is a bit simpler as we can use NAPALM's get_config() method to retrieve the running configuration:

from nornir.plugins.tasks import networking

[SNIP]

    elif task.host.platform == "eos":
        r = task.run(
            task=networking.napalm_get,
            name="Backup Device Configuration",
            getters=["config"],
        )
        task.host["backup_config"] = r.result["config"]["running"]

Once again, we can verify the result in ipdb:

ipdb> nr.inventory.hosts['sea-eos-1']['backup_config']
'! Command: show running-config\n! device: eos-base (vEOS, EOS-4.20.1F)\n!\n! boot system flash:/vEOS-lab.swi\n!\ntransceiver qsfp default-mode 4x10G\n!\nhostname eos-base\n
[SNIP]
!\ninterface Management1\n ip address dhcp\n!\nno ip routing\n!\nmanagement api http-commands\n protocol https port 444\n no shutdown\n!\nend\n'
ipdb>

Very easy!
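With both branches in place, the decision "backup_configs" makes is small enough to sketch without network access. In the sketch below, the two callables are hypothetical stand-ins for the NX-OS checkpoint call and the "napalm_get" task, and raising on an unknown platform is our addition (the task above simply does nothing for other platforms):

```python
from typing import Callable, Dict

GetterResult = Dict[str, Dict[str, str]]


def extract_running(getter_result: GetterResult) -> str:
    # Results are keyed by getter name ("config"); NAPALM's get_config()
    # returns "running", "startup" and "candidate" entries.
    return getter_result["config"]["running"]


def backup_for_platform(
    platform: str,
    get_checkpoint: Callable[[], str],
    get_config: Callable[[], GetterResult],
) -> str:
    """Hypothetical dispatcher mirroring backup_configs."""
    if platform == "nxos":
        # NX-OS: a full checkpoint file, as in the first branch.
        return get_checkpoint()
    if platform == "eos":
        # EOS: the running config pulled out of the getter result.
        return extract_running(get_config())
    # Our addition: fail loudly instead of silently skipping unknown platforms.
    raise ValueError(f"no backup method for platform {platform!r}")


def fake_checkpoint() -> str:
    return "!Command: Checkpoint cmd vdc 1\n"


def fake_getters() -> GetterResult:
    return {"config": {"running": "hostname eos-base\n", "startup": "", "candidate": ""}}


print(backup_for_platform("eos", fake_checkpoint, fake_getters))
```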

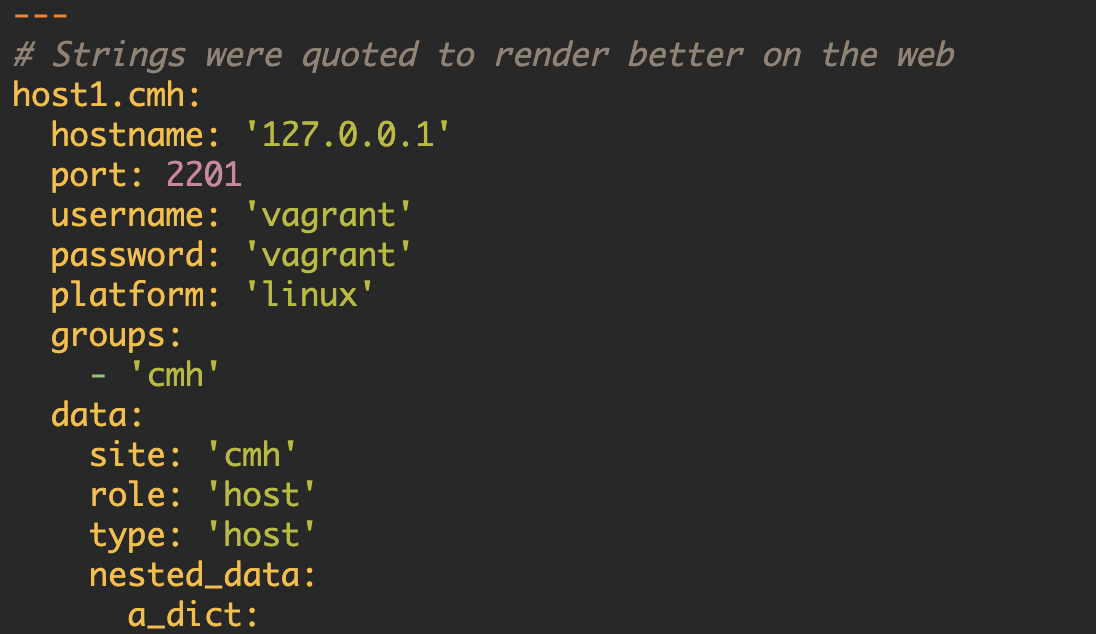

At this point, we won't see the "autoComplete" issue that caused us problems (in certain contexts) when deploying configurations to EOS, but we can go ahead and fix it here anyway. To do this, we create a group_vars file for our "arista_eos" group with the following content:

---
connection_options:
  napalm:
    extras:
      optional_args:
        eos_autoComplete: True

Adding the above snippet enables the EOS auto-complete feature for the "arista_eos" group. For more details on the nature of this issue, see [this GitHub pull request].
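The nesting matters because, as we understand it, Nornir's NAPALM connection plugin merges "extras" into the keyword arguments it passes to the NAPALM driver and adds the inventory "port" to "optional_args" when none is set there. That second step also explains how port 22 reached NX-API earlier. The function below is a simplified model of that behavior, based on our reading rather than the plugin's actual code. The name "build_napalm_params" is hypothetical, and the deepcopy is a choice made in this sketch:

```python
import copy
from typing import Any, Dict, Optional


def build_napalm_params(hostname: str, username: str, password: str,
                        port: Optional[int],
                        extras: Optional[Dict[str, Any]] = None) -> Dict[str, Any]:
    """Simplified model of how extras and port could become NAPALM driver kwargs."""
    params: Dict[str, Any] = {
        "hostname": hostname,
        "username": username,
        "password": password,
        "optional_args": {},
    }
    # extras (connection_options -> napalm -> extras) are merged over the defaults;
    # deepcopy keeps the shared group-level dict from being mutated below.
    params.update(copy.deepcopy(extras or {}))
    # The inventory port is passed as an optional arg unless one was set explicitly.
    if port and "port" not in params["optional_args"]:
        params["optional_args"]["port"] = port
    return params


group_extras = {"optional_args": {"eos_autoComplete": True}}
print(build_napalm_params("sea-eos-1", "admin", "secret", 444, group_extras))
```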

Writing Backup Configurations to Disk

With the backup and checkpoint taken care of, we can move on to writing these backup configurations to disk. Since we've already written our generated configurations to disk, and already planned for writing our backups, this is a straightforward task: we call the same "write_configs" function that we used earlier, this time passing in the additional "backup=True" argument.

Our main should now look similar to this:

if __name__ == "__main__":
    render_task = nr.run(task=render_configs)
    write_task = nr.run(task=write_configs)
    backup_task = nr.run(task=backup_configs)
    write_task = nr.run(task=write_configs, backup=True)

Sure enough, our configurations are backed up nicely:

$ ls backup/
sea-eos-1 sea-nxos-1

$ head -5 backup/sea-eos-1
! Command: show running-config
! device: eos-base (vEOS, EOS-4.20.1F)
!
! boot system flash:/vEOS-lab.swi
!

$ head -5 backup/sea-nxos-1
!Command: Checkpoint cmd vdc 1
!Time: Tue Mar 26 16:19:02 2019
version 7.0(3)I7(5) Bios:version

Staging a Configuration Change and Obtaining a Diff

In general, it is very valuable to see your pending configuration changes before committing them. This capability is built into the `napalm_install_config` Ansible module, and it is also available for us to use in Nornir.
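Since we now have both the rendered configurations (configs/) and the backups (backup/) on disk, a rough offline preview is also possible with Python's difflib. This is a purely textual sketch under that assumption, using a hypothetical "offline_diff" helper; it is not what NAPALM does, and it will not necessarily match the device-generated diff that NAPALM returns:

```python
import difflib


def offline_diff(running: str, candidate: str, name: str = "device") -> str:
    """Textual unified diff between a saved backup and a rendered config."""
    lines = difflib.unified_diff(
        running.splitlines(keepends=True),
        candidate.splitlines(keepends=True),
        fromfile=f"backup/{name}",
        tofile=f"configs/{name}",
    )
    # unified_diff yields nothing at all when the inputs are identical.
    return "".join(lines)


if __name__ == "__main__":
    current = "!\nhostname eos-base\n!\n"
    proposed = "!\nhostname sea-eos-1\nip name-server vrf default 8.8.4.4\n!\n"
    print(offline_diff(current, proposed, "sea-eos-1"))
```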

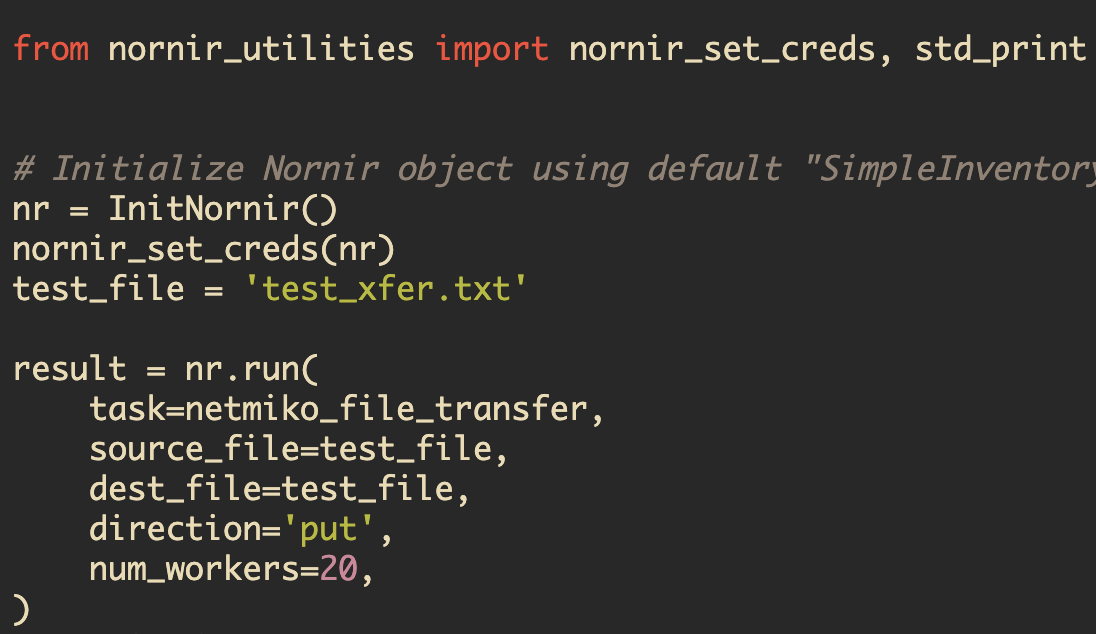

To get rolling, we can import the networking plugins from Nornir into our code:

from nornir.plugins.tasks import networking

Much like before, we can create a new function to group our subtask(s) together:

def deploy_configs(task):
    config = task.host["config"]
    task.run(
        task=networking.napalm_configure,
        name="Deploy Configuration",
        configuration=config,
        replace=True,
    )

The above function should work nicely, but if we want to be able to obtain a "diff" without actually committing the changes, then we will need to modify it slightly.

Nornir already has the concept of a "dry_run". Consequently, we can add an argument to the task and specify whether we only want the "diff" or whether we want to commit the changes to the device.

def deploy_configs(task, dry_run=False):
    config = task.host["config"]
    task.run(
        task=networking.napalm_configure,
        name="Deploy Configuration",
        configuration=config,
        replace=True,
        dry_run=dry_run,
    )

If dry_run is True, then we will not commit the changes and only get the diff. If dry_run is False, then we will commit the changes to the remote device.
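To make the dry-run semantics concrete, here is a toy model of the load, compare, then commit-or-discard flow. The method names mirror NAPALM's load_replace_candidate, compare_config, commit_config and discard_config, and we believe napalm_configure follows a similar sequence, but "FakeDevice" and "configure" are hypothetical and only model that flow in memory:

```python
import difflib


class FakeDevice:
    """Toy device modelling candidate/running configs (not a real NAPALM driver)."""

    def __init__(self, running: str) -> None:
        self.running = running
        self.candidate = ""

    def load_replace_candidate(self, config: str) -> None:
        self.candidate = config

    def compare_config(self) -> str:
        lines = difflib.unified_diff(
            self.running.splitlines(keepends=True),
            self.candidate.splitlines(keepends=True),
        )
        return "".join(lines)

    def commit_config(self) -> None:
        self.running = self.candidate
        self.candidate = ""

    def discard_config(self) -> None:
        self.candidate = ""


def configure(device: FakeDevice, config: str, dry_run: bool) -> str:
    """Return the diff; commit only when dry_run is False and something changed."""
    device.load_replace_candidate(config)
    diff = device.compare_config()
    if diff and not dry_run:
        device.commit_config()
    else:
        # Dry run (or no changes): throw the candidate away, running is untouched.
        device.discard_config()
    return diff


dev = FakeDevice("hostname eos-base\n")
print(configure(dev, "hostname sea-eos-1\n", dry_run=True))
print(dev.running)
```

Either way the diff is returned, which is what lets the same function serve both as a preview and as the real deployment.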

Once again, we can add a line to our main code to execute this function. Additionally, we will call the Nornir "print_result" function to see the output of the "deploy_task":

if __name__ == "__main__":
    render_task = nr.run(task=render_configs)
    write_task = nr.run(task=write_configs)
    backup_task = nr.run(task=backup_configs)
    write_task = nr.run(task=write_configs, backup=True)
    deploy_task = nr.run(task=deploy_configs, dry_run=True)
    print_result(deploy_task)

[Execution Output]

deploy_configs**********************************************
* sea-eos-1 ** changed : True ******************************
vvvv deploy_configs ** changed : False vvvvvvvvvvvvvvvvvvvvv INFO
---- Deploy Configuration ** changed : True ---------------- INFO
@@ -4,7 +4,12 @@
!
transceiver qsfp default-mode 4x10G
!
-hostname eos-base
+hostname sea-eos-1
+ip name-server vrf default 8.8.4.4
[SNIP]
!
management api http-commands
protocol https port 444
^^^^ END deploy_configs ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
* sea-nxos-1 ** changed : True *****************************
vvvv deploy_configs ** changed : False vvvvvvvvvvvvvvvvvvvvv INFO
---- Deploy Configuration ** changed : True ---------------- INFO
no boot nxos bootflash:/nxos.7.0.3.I7.5.bin
no ip name-server 171.70.168.183 100.74.0.32 10.0.2.3 use-vrf management
hostname sea-nxos-1
[SNIP]
neighbor 10.255.255.1
remote-as 65535
update-source loopback0
address-family ipv4 unicast
^^^^ END deploy_configs ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

From this output, we can see that the devices *would* be changed if we set the dry_run argument to False.

If we want to save this "diff" output to a file, we can expand our "deploy_configs" function slightly:

def deploy_configs(task, dry_run=False, diff=False):
    [SNIP]
    deploy_task = task.run(
        task=networking.napalm_configure,
        name="Deploy Configuration",
        configuration=config,
        replace=True,
        dry_run=dry_run,
    )
    task.host["diff"] = deploy_task.diff
    if diff:
        pathlib.Path("diffs").mkdir(exist_ok=True)
        task.run(
            task=write_file,
            filename=f"diffs/{filename}",
            content=task.host["diff"],
        )

With the "diffs" created, we can now move on to actually deploying the configurations!

Deploy Configurations

Since we've done a large amount of preparation work, the actual configuration deployment should be a breeze!

We can simply add another call to our "deploy_configs" function, this time passing the appropriate arguments (i.e., dry_run=False). Additionally, we will want to add a "print_result" call to make sure things are working as desired:

if __name__ == "__main__":
    render_task = nr.run(task=render_configs)
    write_task = nr.run(task=write_configs)
    backup_task = nr.run(task=backup_configs)
    write_task = nr.run(task=write_configs, backup=True)
    deploy_task = nr.run(task=deploy_configs, dry_run=True, diff=True)
    deploy_task = nr.run(task=deploy_configs, dry_run=False, diff=False)
    print_result(deploy_task)

At this point we have done the following:

- Consumed an existing Ansible inventory

- Generated configurations for our devices

- Backed up our existing configurations

- Saved the "diffs" between our new rendered configurations and our current configurations

- And pushed our configuration out to our devices

all using Nornir!