Nornir using an Ansible Inventory (Part 1)

This is part one of the "Nornir using an Ansible Inventory" series; you can find part two of this series here.

Introduction

In this series of articles we are going to generate configurations from template files with Nornir, while using an Ansible-style inventory.

Backstory: Moving from Ansible to Nornir

Several months ago I presented at the Packet Pushers Virtual Design Clinic (VDC) 3; my session was titled "CI/CD for Networking".

In that presentation, I demonstrated using Jenkins and Ansible to provide a repeatable, automatable method for managing network device configurations. I selected Ansible (more specifically NAPALM-Ansible) as the network automation tool of choice for a few reasons:

- NAPALM-Ansible is awesome. My demo leverages NAPALM to provide a consistent interface for replacing entire device configurations across Cisco and Arista devices. I prefer this to the Ansible-core *_config modules as it is a more *declarative* approach which will work better in the long run.

- Ansible, while it has its issues (see below), is widely understood and supported. I tend to view Ansible as the easiest (best?) entry point into network automation (though not the best long-term one, IMO!).

- Ansible provides reasonably nice output and handles concurrency out of the box so we don't have to figure that out for ourselves!

- Ansible inventory and variable management are simple to understand, flexible, and useful.

I'm certain there are tons of other great reasons to use Ansible (and this is 100% not an anti-Ansible post); however, there are some clear challenges with Ansible. Dmitry Figol has stated his objections to Ansible on multiple occasions, so instead of reinventing the wheel I'll paraphrase him here:

- Slow

- Debugging and troubleshooting issues is painful

- Programming flow-control statements in YAML (are bad)

- Integration with other (Python) code is painful

And one more very important point from David Barroso (once again paraphrasing):

- You are learning a one-off DSL instead of gaining experience with Python (i.e. a general purpose language)

Ansible has its advantages, but it also has several challenges. So what other options do we have? What other frameworks are available for network automation?

Plenty, I'm sure!

In this article, though, I want to talk about Nornir.

Nornir is a pure Python automation framework created by David Barroso. As a way to get introduced to Nornir, this post will talk through converting my original Packet Pushers VDC demo from using Ansible to using Nornir. This should give us a practical look at Nornir.

Before going any further, it may be worth taking a peek at the demo from the VDC presentation, which I recorded later (after getting over my monster head cold) [here]. You can also find the GitHub repository for the demo [here].

Without any further preamble, let's get into converting the Ansible playbooks into Nornir!

Setup

This post picks up right where my Packet Pushers VDC repository and demo leave off. Feel free to use the Vagrant environment documented in the original repository and simply clone the [VDC repo] into the Jenkins box (we'll ignore the Jenkins bits for this post, but we can still use that machine as our development environment).

This isn't a step-by-step guide, but hopefully will help you get started with Nornir.

The only modification to the Jenkins base box is the installation of nornir and ipdb (I'm not bothering with a venv, as the box gets destroyed and rebuilt all the time):

```shell
$ python3 -m pip install nornir ipdb --user
```

Moving from Ansible to Nornir: Inventory and Variables

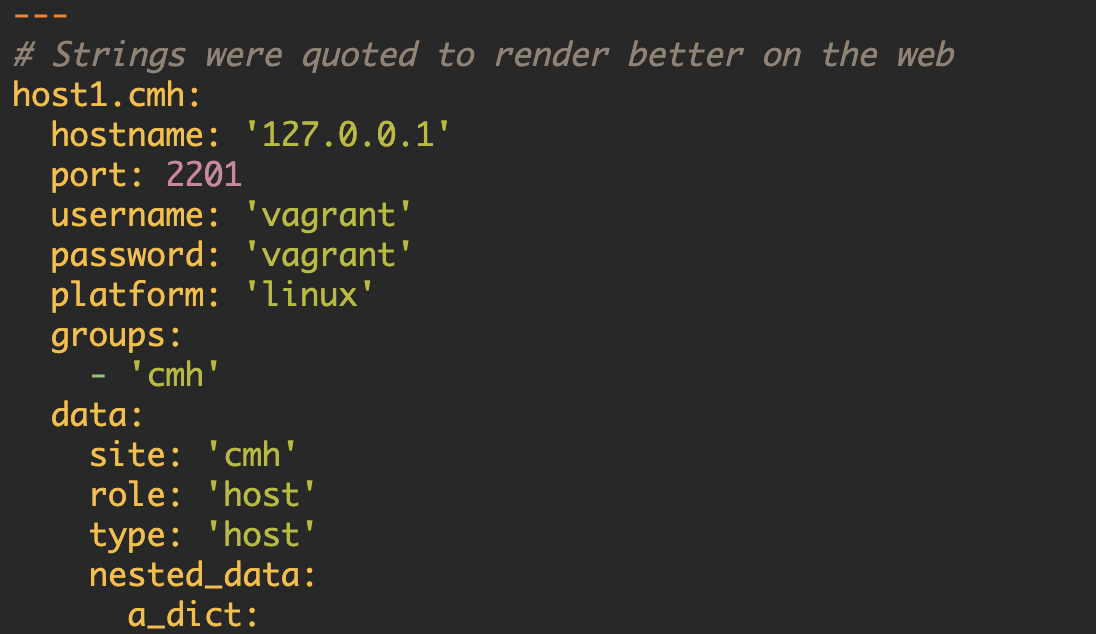

In Ansible, one of the first things we need to take care of is setting up our environment; Nornir is no different. Instead of using an "ansible.cfg" file (as is done in the base repo for the VDC), we simply have a "config.yaml" file. This configuration file is going to tell Nornir some basic settings:

```yaml
---
inventory:
  plugin: nornir.plugins.inventory.ansible.AnsibleInventory
  options:
    hostsfile: "inventory"
```

As you can see, we have kept this very simple.

We have a single section here, "inventory", and it does exactly what you would expect: it tells Nornir which inventory plugin to use and where to find any relevant files. In this case, we use the "AnsibleInventory" plugin so that Nornir simply parses our existing inventory without modification.

Nornir has several other configuration settings. For a list of options, you can see the configuration documentation [here].

With our base configuration out of the way, we can create a Python script to initialize a nornir object:

```python
import ipdb
from nornir import InitNornir

# Initialize Nornir object from config_file
nr = InitNornir(config_file="config.yaml")
ipdb.set_trace()
```

Running this script lets us inspect the nornir object ("nr" in this case).

```
$ python3 deploy.py
--Return--
None
> /home/vagrant/packet_pushers_vdc/deploy.py(6)<module>()
      4 # Initialize Nornir object from config_file
      5 nr = InitNornir(config_file="config.yaml")
----> 6 ipdb.set_trace()

ipdb> type(nr)
<class 'nornir.core.Nornir'>
ipdb> dir(nr)
['__class__', '__delattr__', '__dict__', '__dir__', '__doc__', '__enter__', '__eq__', '__exit__', '__format__', '__ge__', '__getattribute__', '__gt__', '__hash__', '__init__', '__init_subclass__', '__le__', '__lt__', '__module__', '__ne__', '__new__', '__reduce__', '__reduce_ex__', '__repr__', '__setattr__', '__sizeof__', '__str__', '__subclasshook__', '__weakref__', '_run_parallel', '_run_serial', 'close_connections', 'config', 'data', 'dict', 'filter', 'get_validators', 'inventory', 'logger', 'run', 'state', 'validate']
ipdb>
```

We can see that we've created a nornir object and that this class has some useful attributes. For now, let's focus on the inventory:

```
ipdb> nr.inventory.groups
{'sea': Group: sea, 'arista-eos': Group: arista-eos, 'cisco-nxos': Group: cisco-nxos}
ipdb> nr.inventory.hosts
{'sea-eos-1': Host: sea-eos-1, 'sea-nxos-1': Host: sea-nxos-1}
```

The Nornir AnsibleInventory plugin looks to be working as desired, at least for our hosts and groups. Note that the inventory file in the repository is an INI-style file, but there is also a YAML version if you would prefer to test that.
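To get a feel for what the plugin has to do with an INI-style file, below is a deliberately simplified, stdlib-only sketch that parses one into hosts and groups. It is not nornir's implementation. The sample inventory is hypothetical: only the host and group names come from the output above. The sketch also ignores Ansible features such as ":children" sections, host ranges and quoting.

```python
from collections import defaultdict

# Hypothetical sample: host/group names mirror the article, but the layout
# and values are assumptions made for this illustration.
SAMPLE_INVENTORY = """
[sea]
sea-eos-1
sea-nxos-1

[arista-eos]
sea-eos-1

[cisco-nxos]
sea-nxos-1

[sea:vars]
timezone=PST

[arista-eos:vars]
j2_template_file=arista_eos.j2

[cisco-nxos:vars]
j2_template_file=cisco_nxos.j2
"""


def parse_ini_inventory(text):
    """Parse a simplified Ansible INI inventory into (hosts, groups).

    hosts:  {hostname: {"groups": [group, ...], "vars": {inline host vars}}}
    groups: {groupname: {"vars": {group vars}}}
    Only [group] and [group:vars] sections are handled in this sketch.
    """
    hosts = defaultdict(lambda: {"groups": [], "vars": {}})
    groups = defaultdict(lambda: {"vars": {}})
    section = None
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith(("#", ";")):
            continue  # skip blank lines and comments
        if line.startswith("[") and line.endswith("]"):
            section = line[1:-1]
            continue
        if section is None or section.endswith(":children"):
            continue  # ungrouped hosts and child groups are not supported here
        if section.endswith(":vars"):
            key, _, value = line.partition("=")
            group_name = section[: -len(":vars")]
            groups[group_name]["vars"][key.strip()] = value.strip()
        else:
            # "hostname key=value ..." -> group membership plus inline host vars
            name, *pairs = line.split()
            groups.setdefault(section, {"vars": {}})
            hosts[name]["groups"].append(section)
            for pair in pairs:
                key, _, value = pair.partition("=")
                hosts[name]["vars"][key] = value
    return dict(hosts), dict(groups)


hosts, groups = parse_ini_inventory(SAMPLE_INVENTORY)
print(sorted(groups))                # ['arista-eos', 'cisco-nxos', 'sea']
print(hosts["sea-eos-1"]["groups"])  # ['sea', 'arista-eos']
```

The real plugin also has to merge these pieces into nornir's own Host and Group objects. The point here is only how little structure an INI inventory actually carries.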

In Ansible, host and group variables are generally part of the inventory system and are accessible in Plays and Tasks: Nornir functions in a similar way. The "data" attribute of any individual host is a dictionary representation of all of the "host_vars":

```
ipdb> nr.inventory.hosts['sea-eos-1'].data
{'bgp_ipv4_unicast': {'neighbors': {'10.255.255.2': {'remote-as': 65535,
                                                     'update-source': 'loopback0'}},
                      'networks': ['10.255.255.101/32']},
 'ethernet_interfaces': {'Ethernet1': {'access': False,
                                       'description': 'sea-eos-1 Mgmt',
                                       'routed': {'ip': '10.0.1.101/24'},
                                       'state': 'no shutdown',
                                       'trunk': False},
                         'Ethernet2': {'access': False,
                                       'description': 'sea-nxos-1 Eth1/2',
                                       'routed': {'ip': '10.255.254.1/31'},
                                       'state': 'no shutdown',
                                       'trunk': False},
                         'Ethernet3': {'access': False,
                                       'description': 'sea-nxos-1 Eth1/3',
                                       'routed': {'ip': '10.255.254.3/31'},
                                       'state': 'no shutdown',
                                       'trunk': False}},
 'loopback_interfaces': {'Loopback0': {'description': 'sea-eos-1 Loopback',
                                       'routed': {'ip': '10.255.255.1/32'}}},
 'ospf_interfaces': {'ospf_Ethernet1': {'area': '0.0.0.0',
                                        'passive': True,
                                        'type': 'point-to-point'},
                     'ospf_Ethernet2': {'area': '0.0.0.0',
                                        'passive': False,
                                        'type': 'point-to-point'},
                     'ospf_Ethernet3': {'area': '0.0.0.0',
                                        'passive': False,
                                        'type': 'point-to-point'},
                     'ospf_Loopback0': {'area': '0.0.0.0', 'passive': True}},
 'router_id': '10.255.255.1'}
```

Interestingly, this is *just* the host variables; it doesn't include the group variables.

If, however, we inspect the "items" or "values" we can clearly see that we do in fact have access to all of the group-level variables as well ("timezone" is a "sea" group variable in this example):

```
ipdb> nr.inventory.hosts['sea-eos-1'].items()
dict_items(
[('dev_hostname', 'sea-eos-1'),
 ('router_id', '10.255.255.1'),
 ('ethernet_interfaces',
  {'Ethernet1': {'access': False,
                 'description': 'sea-eos-1 Mgmt',
                 'routed': {'ip': '10.0.1.101/24'},
                 'state': 'no shutdown',
                 'trunk': False},
   'Ethernet2': {'access': False,
                 'description': 'sea-nxos-1 Eth1/2',
                 'routed': {'ip': '10.255.254.1/31'},
                 'state': 'no shutdown',
                 'trunk': False},
   'Ethernet3': {'access': False,
                 'description': 'sea-nxos-1 Eth1/3',
                 'routed': {'ip': '10.255.254.3/31'},
                 'state': 'no shutdown',
                 'trunk': False}}),
 ('loopback_interfaces',
  {'Loopback0': {'description': 'sea-eos-1 Loopback',
                 'routed': {'ip': '10.255.255.1/32'}}}),
 ('ospf_interfaces',
  {'ospf_Ethernet1': {'area': '0.0.0.0',
                      'passive': True,
                      'type': 'point-to-point'},
   'ospf_Ethernet2': {'area': '0.0.0.0',
                      'passive': False,
                      'type': 'point-to-point'},
   'ospf_Ethernet3': {'area': '0.0.0.0',
                      'passive': False,
                      'type': 'point-to-point'},
   'ospf_Loopback0': {'area': '0.0.0.0', 'passive': True}}),
 ('bgp_ipv4_unicast',
  {'neighbors': {'10.255.255.2': {'remote-as': 65535,
                                  'update-source': 'loopback0'}},
   'networks': ['10.255.255.101/32']}),
 ('j2_template_file', 'arista_eos.j2'),
 ('netmiko_port', 22),
 ('timezone', 'PST'),
 ('timezone_offset', 8),
 ('timezone_summer', 'PDT'),
 ('domain_name', 'sea.acme.io'),
 ('name_servers', ['8.8.8.8', '8.8.4.4']),
 ('ospf_proc_id', 1),
 ('ospf_hello', 1),
 ('ospf_dead', 3),
 ('bgp_asn', 65535),
 ('bgp_max_paths_ibgp', 2),
 ('ansible_python_interpreter', '/usr/bin/python3'),
 ('ssh_key_username', 'vagrant'),
 ('ssh_key_file', '~/.vagrant.d/insecure_private_key')]
)
```

Finally, as this is "pure" Python, we can access any of these variables as you normally would:

```
ipdb> nr.inventory.hosts['sea-eos-1']['timezone']
'PST'
```

Now that we have a basic understanding of accessing hosts and their variables, let's try to generate our configurations using the same Jinja2 templates as before, but this time using Nornir.
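Before moving on, the lookup behaviour we just saw can be modelled in a few lines. ".data" holds only the host variables, while indexing and "items()" also reach the group variables. The classes below are toy stand-ins, not nornir's Host or Group. The sketch assumes, as the output suggests, that host variables take precedence over group variables and that groups are searched in order.

```python
class ToyGroup:
    """Hypothetical stand-in for an inventory group: a name plus its variables."""

    def __init__(self, name, data):
        self.name = name
        self.data = data


class ToyHost:
    """Hypothetical host: .data holds host vars only; lookups fall back to groups."""

    def __init__(self, name, data, groups):
        self.name = name
        self.data = data        # host_vars only, like .data above
        self.groups = groups    # ToyGroup objects, searched in order

    def __getitem__(self, key):
        # Host variables win; otherwise use the first group that defines the key.
        if key in self.data:
            return self.data[key]
        for group in self.groups:
            if key in group.data:
                return group.data[key]
        raise KeyError(key)

    def keys(self):
        # Host keys first, then group keys the host does not override.
        keys = list(self.data)
        for group in self.groups:
            for key in group.data:
                if key not in keys:
                    keys.append(key)
        return keys

    def items(self):
        return [(key, self[key]) for key in self.keys()]


sea = ToyGroup("sea", {"timezone": "PST", "domain_name": "sea.acme.io"})
eos = ToyGroup("arista-eos", {"j2_template_file": "arista_eos.j2"})
host = ToyHost("sea-eos-1", {"router_id": "10.255.255.1"}, [eos, sea])

print(host.data)         # {'router_id': '10.255.255.1'}
print(host["timezone"])  # PST
print(host.keys())       # ['router_id', 'j2_template_file', 'timezone', 'domain_name']
```

The real Host class does more than this, but the split between "own" data and inherited data is the same idea.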

Rendering Jinja Templates with Nornir

Before jumping into rendering our Jinja2 template, we need to briefly discuss Tasks in nornir.

In nornir, a Task is the code that gets executed for each of your nornir hosts. You execute a Task using the Nornir ".run()" method. Nornir comes with a set of built-in task plugins; you can see these plugins [here]. These plugins provide ready-made tasks that you can call (think of them as functions that you execute per host using the .run() method). For now, we are concerned with the "template_file" Task, which renders a Jinja2 template.

Note that in nornir you can also create subtasks. One reason for creating subtasks is that they allow you to access information specific to each host. In this Jinja2 example, we will need to create a subtask (as we have host-specific variables that we need to access).

How do we create a Nornir subtask?

In order to create a subtask, we just create a Python function that takes an argument named "task" as its first parameter.

Basically, the subtask will look similar to the following:

```python
def some_task_name(task):
    # Per-host code goes here; "task" is bound to a nornir Task for one host.
    ...
```

Then, in our main code, we call the .run() method and pass in our subtask as an argument (we will show an example of this shortly). Note that behind the scenes there is some nornir magic that executes the subtask in a separate thread and passes a Nornir Task object in as the first argument. In other words, the "task" parameter in our subtask will be bound to a per-host Nornir Task object.
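The "task is bound per host" idea can be illustrated without nornir. In the toy below, every name is hypothetical rather than one of nornir's classes. A runner creates one task object per host and calls our function with that object as the first positional argument, which is why the function can reach host data through "task.host". For clarity the sketch runs sequentially, whereas nornir spreads these calls across threads.

```python
from types import SimpleNamespace


class ToyTask:
    """Hypothetical per-host task object; it only carries the host it is bound to."""

    def __init__(self, host):
        self.host = host


def toy_run(hosts, func, **kwargs):
    """Call func once per host with a fresh ToyTask as its first argument.

    Sequential for clarity; nornir itself runs these calls in worker threads.
    """
    return {name: func(ToyTask(host), **kwargs) for name, host in hosts.items()}


def some_task_name(task):
    # We are now in a per-host context: task.host refers to this host only.
    return f"{task.host.name} -> {task.host.j2_template_file}"


hosts = {
    "sea-eos-1": SimpleNamespace(name="sea-eos-1", j2_template_file="arista_eos.j2"),
    "sea-nxos-1": SimpleNamespace(name="sea-nxos-1", j2_template_file="cisco_nxos.j2"),
}
print(toy_run(hosts, some_task_name))
# {'sea-eos-1': 'sea-eos-1 -> arista_eos.j2', 'sea-nxos-1': 'sea-nxos-1 -> cisco_nxos.j2'}
```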

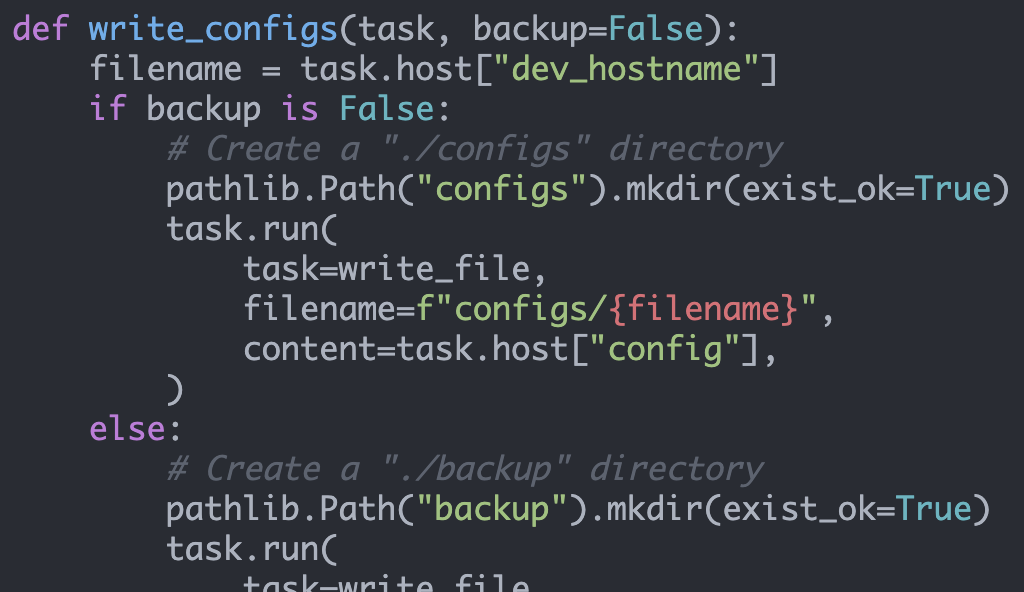

So what does all that mean in the context of our Jinja2 templating? Basically, that we need to create a subtask for our Jinja2 templating. This subtask code would be as follows:

```python
from nornir.plugins.tasks.text import template_file


def render_configs(task):
    """
    Nornir task to render device configurations from j2 templates.

    Args:
        task: nornir task object
    """
    # Per-host template name, e.g. "arista_eos.j2" (set in the inventory below)
    filename = task.host["j2_template_file"]
    r = task.run(
        task=template_file,
        name="Base Template Configuration",
        template=filename,
        path="templates",
        # Pass every host/group variable through to the template
        **task.host,
    )
    # Keep the rendered text on the host for later use
    task.host["config"] = r.result
```

Note that inside the subtask we also call the .run() method. In our main program we call nr.run() on all of our nornir hosts and pass in our subtask. This ultimately causes our subtask to be invoked in a child thread, where we access some host-specific variables and call another task using task.run() (in our example, the "template_file" task provided by nornir).
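The "**task.host" line works because Python's double-star unpacking accepts any object that provides "keys()" and "__getitem__". Every host and group variable therefore becomes a keyword argument for the rendering call. The stdlib-only sketch below uses a hypothetical mapping-like host and a stand-in render function, not nornir's "template_file". It also shows one caveat worth knowing: a variable whose name clashes with an explicit argument such as "template" makes the call fail.

```python
class MappingLikeHost:
    """Hypothetical host exposing keys() and __getitem__, enough for ** unpacking."""

    def __init__(self, variables):
        self._vars = dict(variables)

    def keys(self):
        return self._vars.keys()

    def __getitem__(self, key):
        return self._vars[key]


def fake_template_file(template, path, **kwargs):
    """Stand-in renderer: report which variables would reach the template."""
    return {"template": f"{path}/{template}", "variables": sorted(kwargs)}


host = MappingLikeHost({"timezone": "PST", "domain_name": "sea.acme.io"})
print(fake_template_file(template="arista_eos.j2", path="templates", **host))
# {'template': 'templates/arista_eos.j2', 'variables': ['domain_name', 'timezone']}

# A host variable named like an explicit argument collides with it.
clashing = MappingLikeHost({"template": "oops.j2"})
try:
    fake_template_file(template="arista_eos.j2", path="templates", **clashing)
except TypeError as exc:
    print("TypeError:", exc)  # duplicate keyword argument 'template'
```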

Next, the "j2_template_file" variable didn't exist in the Ansible version of the inventory files, so we simply need to add it for each of our device types (in this case, in our simple inventory file):

```ini
[SNIP]
[arista-eos:vars]
j2_template_file=arista_eos.j2
[SNIP]
[cisco-nxos:vars]
j2_template_file=cisco_nxos.j2
```

One last minor adjustment is necessary in order to use our templates. Nornir doesn't provide Ansible's "inventory_hostname" variable; instead, we can access the same value using "host.name". Consequently, we can simply adjust the j2 template files to reflect this (make sure you update the VDC section of the NX-OS template too!):

```
hostname {{ host.name }}
```

At this point, we can use the nornir ".run()" method to execute our newly created subtask (which, in turn, runs the templating task we require):

from nornir.plugins.functions.text import print_result

if __name__ == "__main__":

render_task = nr.run(task=render_configs)

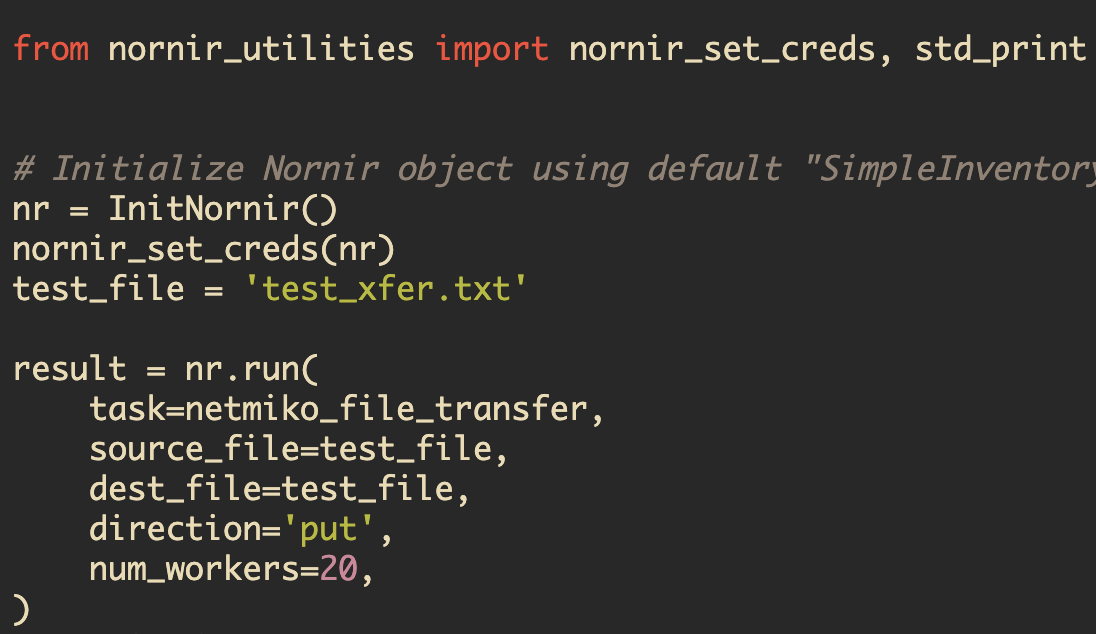

print_result(render_task)In this case (with only two hosts) things aren't wildly impressive, but behind the scenes, nornir will execute our task(s) concurrently (up to the number of workers specified with default value of 20 workers). We can also see this output thanks to the "print_result" function:

```
$ python3 deploy.py
render_configs**********************************************
* sea-eos-1 ** changed : False *****************************
vvvv render_configs ** changed : False vvvvvvvvvvvvvvvvvvvvv INFO
---- Base Template Configuration ** changed : False -------- INFO
transceiver qsfp default-mode 4x10G
!
hostname sea-eos-1
[SNIP]
!
ip routing
!
management api http-commands
 no shutdown
 protocol https port 444
^^^^ END render_configs ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
* sea-nxos-1 ** changed : False ****************************
vvvv render_configs ** changed : False vvvvvvvvvvvvvvvvvvvvv INFO
---- Base Template Configuration ** changed : False -------- INFO
!Command: Checkpoint cmd vdc 1
!Time: Wed Dec 5 01:12:16 2018
version 7.0(3)I7(5) Bios:version
hostname sea-nxos-1
[SNIP]
!#logging logfile messages 5 size 4194304
!#logging monitor
!#logging module
!#logging console
^^^^ END render_configs ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
```

Pretty slick: we can see the results for each host and task in a readable output.

Not only that, but with very minor tweaks we were able to leverage the existing Ansible inventory setup and use nornir to generate our configurations.
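Earlier we noted that nornir executes the per-host task concurrently, up to a configured number of workers (20 by default). That behaviour can be roughly approximated with the standard library's "ThreadPoolExecutor". This is a model built on our own assumptions, not nornir's runner. It submits one call per host, bounds parallelism with a hypothetical "num_workers" parameter, and collects results into a dict keyed by host name. The counters only exist to show that no more than "num_workers" calls ever overlap.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor


def run_concurrently(hostnames, func, num_workers=20):
    """Run func(hostname) for every host on at most num_workers threads.

    Returns {hostname: return value}, loosely mirroring a per-host result mapping.
    """
    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        futures = {name: pool.submit(func, name) for name in hostnames}
        return {name: future.result() for name, future in futures.items()}


active = 0
peak = 0
lock = threading.Lock()


def fake_render(hostname):
    """Pretend to render a config while tracking how many calls overlap."""
    global active, peak
    with lock:
        active += 1
        peak = max(peak, active)
    time.sleep(0.05)  # simulate work (templating, I/O, ...)
    with lock:
        active -= 1
    return f"hostname {hostname}"


hosts = [f"sea-eos-{i}" for i in range(1, 9)]
results = run_concurrently(hosts, fake_render, num_workers=3)
print(results["sea-eos-1"])  # hostname sea-eos-1
print(peak <= 3)              # True: never more than 3 calls at once
```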

Nornir AggregatedResult & MultiResult

We now know that nornir provides pretty-printable output from our task(s), but it's worth taking additional time to understand what this result object actually is. This is a challenging area for many folks when starting to learn nornir (myself very much included!).

Not to worry though, ipdb gives us an easy way of inspecting these objects.

Adding a simple set_trace() to our "deploy.py" file will allow us to inspect this results object (we can also disable the output printing):

```
ipdb> type(render_task)
<class 'nornir.core.task.AggregatedResult'>
ipdb> dir(render_task)
['__class__', '__contains__', '__delattr__', '__delitem__', '__dict__', '__dir__', '__doc__', '__eq__', '__format__', '__ge__', '__getattribute__', '__getitem__', '__gt__', '__hash__', '__init__', '__init_subclass__', '__iter__', '__le__', '__len__', '__lt__', '__module__', '__ne__', '__new__', '__reduce__', '__reduce_ex__', '__repr__', '__setattr__', '__setitem__', '__sizeof__', '__str__', '__subclasshook__', '__weakref__', 'clear', 'copy', 'failed', 'failed_hosts', 'fromkeys', 'get', 'items', 'keys', 'name', 'pop', 'popitem', 'raise_on_error', 'setdefault', 'update', 'values']
ipdb>
```

From the methods and attributes available, we can tell that the "AggregatedResult" object is iterable. We have two devices in our inventory (the EOS device and the NX-OS device), so it would stand to reason that there are two results in our AggregatedResult object, one for each device:

```
ipdb> type(render_task)
<class 'nornir.core.task.AggregatedResult'>
ipdb> len(render_task)
2
ipdb>
```

Looking at the object itself, we can learn even more:

```
ipdb> type(render_task)
<class 'nornir.core.task.AggregatedResult'>
ipdb> render_task
AggregatedResult (render_configs): {'sea-eos-1': MultiResult: [Result: "render_configs", Result: "Base Template Configuration"], 'sea-nxos-1': MultiResult: [Result: "render_configs", Result: "Base Template Configuration"]}
```

So, AggregatedResult basically looks and feels like a dictionary where our hosts are the keys and some set of results are the corresponding values. We can work with that!

Now, we can access the results for each host (the keys) just like a "normal" dictionary. We can also see that the value for this key (sea-eos-1) is a "MultiResult" object, which we can assume contains the results of any task(s) that were performed for that host.

```
ipdb> render_task['sea-eos-1']
MultiResult: [Result: "render_configs", Result: "Base Template Configuration"]
ipdb> type(render_task['sea-eos-1'])
<class 'nornir.core.task.MultiResult'>
ipdb> render_task['sea-eos-1'][0]
Result: "render_configs"
```

If we have only one task (to render our configurations), you may be asking yourself why we have *two* results for each of our hosts.

Well, we have technically run *two* tasks per host: the subtask that we called from the main part of our script, and the actual template_file task. What we've somewhat inadvertently done is create a group of tasks [docs]. Why would you want to group tasks? There could be a few reasons. You may want a bunch of similar tasks linked together (for example, if the sequencing matters), or, as in our case, you need to be able to access the individual host attributes.

Because nornir does some magic for us (namely the concurrent task execution), there is a lot going on under the covers to create the threads that execute these tasks across each of the host(s) [docs]. The practical implication in this example, and the reason we need to group the tasks, is that we cannot access host-specific items until we are in the host-specific thread. Put differently, we need to do things on a per-host basis (in our case, grab variables for each host), but we are not in a per-host context until nornir creates and starts the child threads. The subtask is how we get our code running inside those per-host threads.
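A toy model makes the "two results per host" outcome easy to see. Its classes are hypothetical, not nornir's. Each call to a task's run() appends an entry to one shared per-host list. The outer grouping function and the inner rendering call therefore both leave an entry, and because the outer function returns nothing, its entry carries no result.

```python
from dataclasses import dataclass
from typing import Any


@dataclass
class ToyResult:
    name: str
    result: Any = None


class ToyTask:
    """Hypothetical per-host task; nested run() calls share one results list."""

    def __init__(self, host, results=None):
        self.host = host
        self.results = results if results is not None else []

    def run(self, func, name, **kwargs):
        entry = ToyResult(name)
        self.results.append(entry)  # the outer entry is recorded first
        entry.result = func(ToyTask(self.host, self.results), **kwargs)
        return entry


def render(task, template):
    # Innermost "real" work: produces the text we care about.
    return f"hostname {task.host} (from {template})"


def render_configs(task):
    # Grouping function: delegates to an inner task and returns nothing itself.
    task.run(render, name="Base Template Configuration", template="arista_eos.j2")


top = ToyTask("sea-eos-1")
top.run(render_configs, name="render_configs")
for r in top.results:
    print(r)
# ToyResult(name='render_configs', result=None)
# ToyResult(name='Base Template Configuration', result='hostname sea-eos-1 (from arista_eos.j2)')
```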

Now back to our "MultiResult" object. With this new understanding, it should make more sense why we have two results.

We can interrogate the results objects to learn what is contained in them:

```
ipdb> render_task['sea-eos-1'][0]
Result: "render_configs"
ipdb> dir(render_task['sea-eos-1'][0])
['__class__', '__delattr__', '__dict__', '__dir__', '__doc__', '__eq__', '__format__', '__ge__', '__getattribute__', '__gt__', '__hash__', '__init__', '__init_subclass__', '__le__', '__lt__', '__module__', '__ne__', '__new__', '__reduce__', '__reduce_ex__', '__repr__', '__setattr__', '__sizeof__', '__str__', '__subclasshook__', '__weakref__', 'changed', 'diff', 'exception', 'failed', 'host', 'name', 'result', 'severity_level', 'stderr', 'stdout']
ipdb> render_task['sea-eos-1'][0].result
ipdb> render_task['sea-eos-1'][0].failed
False
```

With the first result in the "MultiResult" object, we don't get much information. This is because the first task, "render_configs", essentially just calls our function to do the actual rendering and returns nothing itself. The second result object is significantly more interesting:

```
ipdb> render_task['sea-eos-1'][1]
Result: "Base Template Configuration"
ipdb> render_task['sea-eos-1'][1].result
'transceiver qsfp default-mode 4x10G\n!\nhostname sea-eos-1 \nip domain-name sea.acme.io\nip name-server vrf default 8.8.8.8\nip name-server vrf default 8.8.4.4\n!\nntp server pool.ntp.org\n!\nspanning-tree mode mstp\n!\naaa authorization exec default local\n!\nno aaa root\n!\nusername admin privilege 15 role network-admin secret sha512 $6$nmRI5wZF9FGNAbjU$NB1obNreBYKGzFXeI2NSDK8RY3CUHCWyRJ/A.oHOPG1vWCpz.9ecSuyKaIO2/T2RTLDws.viZ1XwVL7dMnJDO.\nusername vagrant privilege 15 nopassword\nusername vagrant sshkey ssh-rsa AAAAB3NzaC1yc2EAAAABIwAAAQEA6NF8iallvQVp22WDkTkyrtvp9eWW6A8YVr+kz4TjGYe7gHzIw+niNltGEFHzD8+v1I2YJ6oXevct1YeS0o9HZyN1Q9qgCgzUFtdOKLv6IedplqoPkcmF0aYet2PkEDo3MlTBckFXPITAMzF8dJSIFo9D8HfdOV0IAdx4O7PtixWKn5y2hMNG0zQPyUecp4pzC6kivAIhyfHilFR61RGL+GPXQ2MWZWFYbAGjyiYJnAmCP3NOTd0jMZEnDkbUvxhMmBYSdETk1rRgm+R4LOzFUGaHqHDLKLX+FIPKcF96hrucXzcWyLbIbEgE98OHlnVYCzRdK8jlqm8tehUc9c9WhQ==\n!\nclock timezone PST8PDT\n!\ninterface Management1\n ip address dhcp\n!\nip routing\n!\n\n\nmanagement api http-commands\n no shutdown\n protocol https port 444'
ipdb> render_task['sea-eos-1'][1].failed
False
ipdb>
```

Nice! We can see the result is the actual rendered configuration, and of course we know that it did *not* fail.
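AggregatedResult behaves like a dictionary mapping host names to MultiResults, and in this example the rendered text sits in the last Result for each host. Pulling the configurations out therefore takes only a few lines. The helper below is a sketch that relies only on the shape we saw in the ipdb session: "items()", indexing, and the ".result"/".failed" attributes. Its name is hypothetical. The example uses SimpleNamespace stand-ins rather than real nornir objects so that it runs anywhere.

```python
from types import SimpleNamespace


def collect_rendered_configs(aggregated):
    """Map each host to the text of its last (innermost) result.

    Works with any mapping of host -> sequence of objects exposing
    .result and .failed, e.g. the AggregatedResult inspected above.
    Hosts whose final result failed are skipped.
    """
    configs = {}
    for host, multi in aggregated.items():
        last = multi[-1]
        if not last.failed:
            configs[host] = last.result
    return configs


fake = {
    "sea-eos-1": [
        SimpleNamespace(name="render_configs", result=None, failed=False),
        SimpleNamespace(name="Base Template Configuration",
                        result="hostname sea-eos-1\n", failed=False),
    ],
    "sea-nxos-1": [
        SimpleNamespace(name="render_configs", result=None, failed=False),
        SimpleNamespace(name="Base Template Configuration",
                        result="hostname sea-nxos-1\n", failed=False),
    ],
}
print(collect_rendered_configs(fake))
# {'sea-eos-1': 'hostname sea-eos-1\n', 'sea-nxos-1': 'hostname sea-nxos-1\n'}
```

With the real object you would pass in "render_task" after nr.run(). Whether index -1 is the right entry depends on how your grouped task is structured. Because "render_configs" also stores the text in task.host["config"], reading it back from the inventory hosts is another option.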

In the next article, we'll deal with saving our generated configurations and backing up the device configurations using NAPALM getters.