StackStorm + Nagios

Event-driven Automation (Part2)

This is part two of the Stackstorm + Nagios event-driven Automation series, you can find part one of this series here.

Reference code for this article is located here.

Introduction

In Part1 of this series we began work on automating device recover with StackStorm based on Nagios alerts. In this post, we'll complete the workflow of using Nagios and StackStorm to automatically recovery failed Arista vEOS devices.

Previously, we configured Nagios to send a webhook to StackStorm. This will notify StackStorm of any downed hosts and will include the hostname in the Nagios message. In response to this event, StackStorm is currently set up to run our simple "hello world" action.

Our task now is to build not just a simple action—but a "workflow", that orchestrates the checking, remediation, and verification (to confirm that the device is now back online) of any failed hosts. Let's jump right into it...

Workflows

Right now we trigger a single StackStorm action when a Nagios alert is received.

We could theoretically expand upon that action to incorporate all the steps necessary to recover our host. Unfortunately, if we did this, we would be building a big giant action that is amalgamation of multiple discrete tasks. For example, check if the host is up or down, log into the KVM host and perform the remediation, verify the host has been recovered, et cetera.

Instead of using this single large action, we can build a series of small actions and chain them together in a "workflow".

A "workflow" in StackStorm is a way to chain independent tasks together. And more than that—it provides a way to share state or pass information between tasks.

There are a few "flavors" of workflows in StackStorm. The recommendation from the StackStorm folks, however, is to use the "Orquesta" workflow, so that is exactly what we'll do!

Similar to other items that we've built in StackStorm, a workflow is defined in a YAML file. The workflow definitions are stored in the pack directory under "actions/workflows" so we'll go ahead and create this directory:

$ mkdir ~/super_cool_python/actions/workflowsThe actual YAML file that describes our workflow contains a few primary sections: metadata, "input", "tasks", and "output". The "inputs" section allows the workflow to accept arguments when it is executed. "Tasks" are of course the tasks that the workflow will execute, and "outputs" are any data that the workflow will return upon its completion.

Let's start the foundation of our workflow:

version: 1.0

description: remediate down devices

input:

- down_device

tasks:

check_device:

action: core.echo message="device down --> <% ctx(down_device) %>"

next:

- when: <% failed() %>

do: failure

publish:

- stdout: <% result().stdout %>

- stderr: <% result().stderr %>

- when: <% succeeded() %>

do: success

publish:

- stdout: <% result().stdout %>

- stderr: <% result().stderr %>

success:

action: core.echo message="yay, great success!"

failure:

action: core.echo message="uhoh something failed"

output:

- stdout: <% ctx(stdout) %>

- stderr: <% ctx(stderr) %>Another file that looks intimidating...and it is :-)

Let's walk through it.

Starting with some simple bits, we have a version and a description.

Next, we've got the "input" field:

input:

- down_deviceThis is a list of arguments that are passed into our workflow. Or worded differently, we are going to pass in a variable named "down_device" that will be usable in this workflow. Orquesta (the StackStorm workflow engine we are using) uses something it refers to as a "context" to keep track of shared variables in the workflow. You can access this context of shared variables using a function named "ctx()" (programmers like to be obscure so they used a three letter abbreviation for the term "context" here).

Consequently, you will see things similar to the following in the workflow definition:

<% ctx(down_device) %>The "<%" brackets indicate this is operating in a YAQL context. And I am confident that for a lot of you—the very next question will be "what the heck is YAQL"?

For reference see: YAQL Documentation

In our context here, we can think of YAQL as being a domain-specific language that is similar to Jinja2. We are going to use it to evaluate variables and to call functions (embedded inside of this YAML file). Note that StackStorm-Orquesta can alternatively use Jinja2 instead of YAQL. There is a good chance we will switch to Jinja2 moving forward since we are already familiar with these patterns.

Similar to Jinja2, YAQL uses special characters to indicate an expression (here the <% expressions %>). In our example, we have "ctx(down_device)" inside the YAQL expression:

<% ctx(down_device) %>So we are using YAQL to say we want to call a function. The function that we call is the special "ctx" function and the thing we are retrieving from it is the "down_device" variable which we passed in as an argument to this workflow.

Whew that was challenging...

Now that we have a brief understanding of YAQL, ctx, and input variables, let's look at the tasks section.

tasks:

check_device:

action: core.echo message="device down --> <% ctx(down_device) %>"

next:

- when: <% failed() %>

do: failure

publish:

- stdout: <% result().stdout %>

- stderr: <% result().stderr %>

- when: <% succeeded() %>

do: success

publish:

- stdout: <% result().stdout %>

- stderr: <% result().stderr %>

success:

action: core.echo message="yay, great success!"

failure:

action: core.echo message="uhoh something failed"We see here that we have three different tasks: "check_device", "success", and "failure".

In YAML, the "tasks" section is a dictionary with the keys: check_device, success, and failure. A reasonable question that then arises is what is the order of execution of these tasks? Note, the designers of Orquesta did not choose a list here...so that makes me think that the task execution is not simply linear (i.e. check_device, then success, then failure).

Reading in the StackStorm + Orquesta documentation describes this in a hard to follow manner using the terms "directed graph" and "edges". I say hard to follow as this is definitely a case where adding a diagram would have significantly improved the documentation.

The long and short of task execution (for our workflow) is that check_device will be the entry point for task execution as it is not downstream from any other tasks.

Or worded differently, check_device can potentially call both the "failure" task and the "success" task inside of its "next" section. Consequently, both the "failure" task and the "success" task are downstream from "check_device" and hence they will not be executed first.

"check_device" (as of right now) executes the "core.echo" action that we've seen previously.

check_device:

action: core.echo message="device down --> <% ctx(down_device) %>"This action also passes a "message" string notifying us which device is "down" (this uses our down_device input variable which we will ultimately get from Nagios).

Within this task, we have a "next" field which tells StackStorm what to do next.

next:

- when: <% failed() %>

do: failure

publish:

- stdout: <% result().stdout %>

- stderr: <% result().stderr %>

- when: <% succeeded() %>

do: success

publish:

- stdout: <% result().stdout %>

- stderr: <% result().stderr %>The failed() and succeeded() are functions that we call inside our YAQL; these functions will report on the status of the action that we executed. These function calls are coupled to a "when" statement which is a conditional (just like ansible-YAML we have programming constructs embedded here).

The "do" statement specifies which task to call next (after completion of this task). So either the "failure" task or the "success" task can be called. The "publish" action updates the internal "context" with the information that we specify. Once again embedded inside the publish statement is a YAQL statement where we call a function. This time we call the "result" function to retrieve the result of the action execution.

The success/failure tasks are also simply "core.echo" tasks at this point.

success:

action: core.echo message="yay, great success!"

failure:

action: core.echo message="uhoh something failed"Lastly, we output any standard out and standard error we "published" in the previously executed tasks. In other words, we temporarily gather "stdout" and "stderr" into our context (ctx) and then eventually output it in the "output" task:

output:

- stdout: <% ctx(stdout) %>

- stderr: <% ctx(stderr) %>The workflow by itself doesn't "do" much. It still needs to be executed with an action; consequently, we can create a very simple action YAML file as we've done before. The only real difference here is that the "entry_point" is our workflow instead of a Python script.

---

name: network_recovery

pack: super_cool_python

enabled: true

description: remediate down devices

runner_type: orquesta

entry_point: workflows/network_recovery.yaml

parameters:

# Note our down_device input argument is here

down_device:

type: string

description: name of host that is down

required: True

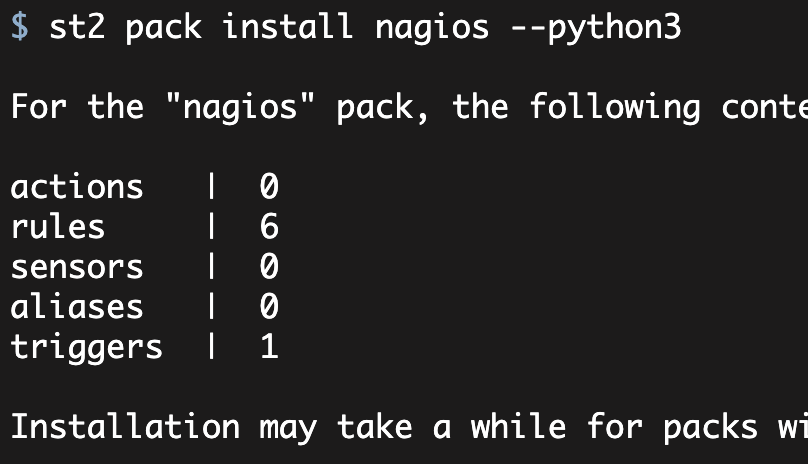

position: 0Once more we need to update git and re-install our pack:

cd ~/super_cool_python/

git add actions/workflows

git add actions/network_recovery.yaml

git commit -m "adding first workflow!"

st2 pack install ~/super_cool_python/ --python3Now we can test executing our workflow

Note, we manually pass in an argument for "down_device" as we haven't (yet) wired in the Nagios trigger.

$ st2 action execute super_cool_python.network_recovery \

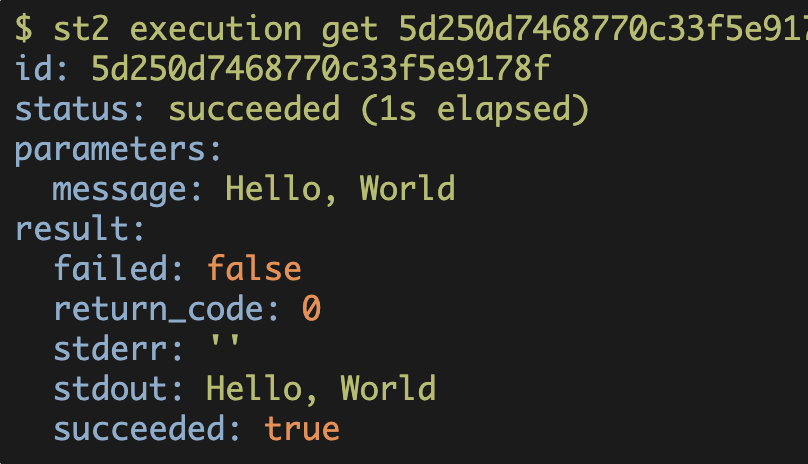

down_device="sad_router_1"And as usual we can see the execution:

$ st2 execution get 5d49b49e68770c7af332535a

id: 5d49b49e68770c7af332535a

action.ref: super_cool_python.network_recovery

parameters:

down_device: sad_router_1

status: succeeded (1s elapsed)

start_timestamp: Tue, 06 Aug 2019 17:10:54 UTC

end_timestamp: Tue, 06 Aug 2019 17:10:55 UTC

result:

output:

stderr: ''

stdout: device down --> sad_router_1

+-----+----------------+--------------+-----------+--------------------+

| id | status | task | action | start_timestamp |

+-----+------------ ---+--------------+-----------+--------------------+

| 3d4 | succeeded (1s) | check_device | core.echo | Tue, 06 Aug 2019 |

| | | | | 17:10:54 UTC |

| 3d7 | succeeded (0s) | success | core.echo | Tue, 06 Aug 2019 |

| | | | | 17:10:55 UTC |

+-----+----------------+--------------+-----------+--------------------+Note, the "id" field above has been abbreviated.

We get some different output when viewing the execution (as compared to previous executions) because this is a workflow. We can then drill into the execution steps that the workflow ran:

$ st2 execution get 5d49b49e68770c59670fe3d4

id: 5d49b49e68770c59670fe3d4

status: succeeded (1s elapsed)

parameters:

message: device down --> sad_router_1

result:

failed: false

return_code: 0

stderr: ''

stdout: device down --> sad_router_1

succeeded: true

$ st2 execution get 5d49b49f68770c59670fe3d7

id: 5d49b49f68770c59670fe3d7

status: succeeded (0s elapsed)

parameters:

message: yay, great success!

result:

failed: false

return_code: 0

stderr: ''

stdout: yay, great success!

succeeded: trueHost Recovery; Nornir + Netmiko

As this post is primarily about StackStorm orchestration, we are going to gloss over the actual Nornir/Netmiko script (in other words, the real recovery script that StackStorm would execute to remediate a failed Arista switch).

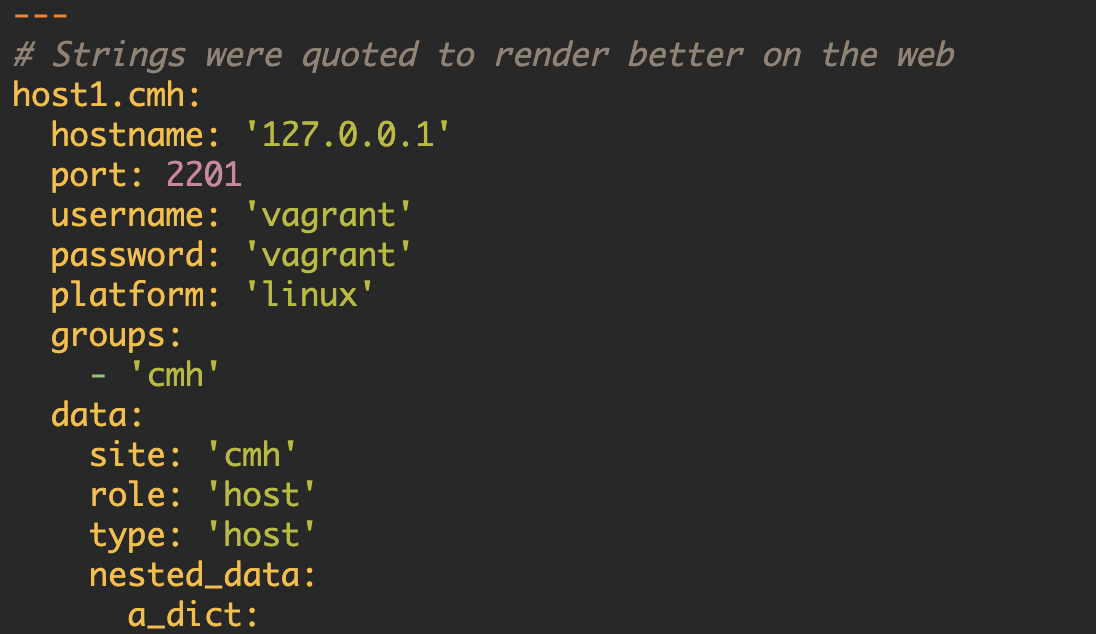

So to summarize the Nornir and Netmiko initial setup:

- I've created a Nornir inventory and config.yaml file in a new directory inside the pack.

- I've also included the appropriate credentials/SSH keys (in "normal" operations, you would want to store this in a secure vault or some other secure manner)

Next, I've created two actions which execute Nornir+Netmiko Python scripts: the first script attempts to connect to the "down" device and verify that the device is actually down and unreachable (this part of the script technically uses both Netmiko and NAPALM).

The second action connects to the KVM server that the vEOS devices are running on. It then telnets from the KVM server to the localhost console of the Arista device (the Arista vEOS switch is listening on localhost and some high TCP port). This part of the script is all Nornir-Netmiko. In order to simplify the recovery process, we have stored a working baseline configuration in the flash of each of the Arista devices. We can then restore this working baseline configuration into the running configuration (which should recover the device).

Lastly I've added a "requirements.txt" file to the pack that lists "nornir" as the only requirement. The Nornir library includes both Netmiko and NAPALM as dependencies so we don't need to list those separately.

With those bits out of the way we can piece together the final parts of the workflow.

Updating Actions & the Workflow

With our glossed over Nornir + Netmiko bits sorted out, we can now focus on updating our workflow. The general flow is (context here is...a Nagios alert has arrived to StackStorm that will trigger this workflow):

- Verify the device is up or down:

- If this fails: continue to try to recover (device is down)

- If this succeeds: finish and note that device was already up (device is up)

- Recover the device:

- If this fails: finish and note the failure

- If this succeeds: confirm the device is up and reachable

- Confirm the device is up:

- If this fails: finish and note that the recovery effort was allegedly successful but the device is still not reachable

- If this succeeds: finish and note that the process was completed successfully

We can accomplish this using the same type of pattern as our previous workflow:

tasks:

check_device:

action: super_cool_python.network_device_check down_device=<% ctx(down_device) %>

next:

- when: <% failed() %>

do: recover_device

publish:

- stdout: <% result().stdout %>

- stderr: <% result().stderr %>

- when: <% succeeded() %>

do: finish_device_not_down

publish:

- stdout: <% result().stdout %>

- stderr: <% result().stderr %>

recover_device:

action: super_cool_python.network_device_recovery down_device=<% ctx(down_device) %>

next:

- when: <% failed() %>

do: finish_failure

publish:

- stdout: <% result().stdout %>

- stderr: <% result().stderr %>

- when: <% succeeded() %>

do: confirm_device

publish:

- stdout: <% result().stdout %>

- stderr: <% result().stderr %>

confirm_device:

action: super_cool_python.network_device_check down_device=<% ctx(down_device) %>

next:

- when: <% failed() %>

do: finish_validation_failure

publish:

- stdout: <% result().stdout %>

- stderr: <% result().stderr %>

- when: <% succeeded() %>

do: finish_success

publish:

- stdout: <% result().stdout %>

- stderr: <% result().stderr %>

finish_device_not_down:

action: core.echo message="device <% ctx(down_device) %> was not down"

finish_failure:

action: core.echo message="failed trying to recover device <% ctx(down_device) %>"

finish_validation_failure:

action: core.echo message="failed to validate device <% ctx(down_device) %> recovered"

finish_success:

action: core.echo message="recovered device <% ctx(down_device) %> successfully!"In the above "tasks", we created our four "finish" criteria with appropriate echo messages (in other words, messages that indicate what happened). We've also modified our tasks to execute the Nornir-Netmiko scripts.

After updating the pack, this workflow could then be manually executed, but that's not what we want—we want this to be automatic!

Updating the Rules

Now we need to update our rule to execute our freshly updated workflow.

This is a simple matter of changing the action in the rule, BUT we also need to add in the name of the failed host as well! In other words, we need to retrieve the name of the failed host from the Nagios HTTP Post.

action:

ref: super_cool_python.network_recovery

description: try to auto remediate network down device

parameters:

down_device: "{{ trigger.host }}"Here we can see that we are able to access the "host" attribute of the trigger (the Nagios trigger), and simply pass that to the workflow.

ST2 in Action

To put our workflow to the test the "arista1" device was brought offline. Sure enough, when the Nagios alarm reached the "HARD" state, then a message was sent to StackStorm. This event caused StackStorm to executed the workflow:

$ st2 execution get 5d49d79d68770c546de40ff8

id: 5d49d79d68770c546de40ff8

action.ref: super_cool_python.network_recovery

parameters:

down_device: arista1

status: succeeded (244s elapsed)

start_timestamp: Tue, 06 Aug 2019 19:40:13 UTC

end_timestamp: Tue, 06 Aug 2019 19:44:17 UTC

result:

output:

stderr: ''

stdout: 'Checking to see if device arista1 is alive...

Trying netmiko connection to device arista1...

Successfully opened netmiko connection to device arista1...

Trying napalm connection to device arista1...

Successfully opened napalm connection to device arista1...

Device arista1 is alive?: True

'

+----+--------------+--------------+----------------------+---------------+

|id |status |task | action |start_timestamp|

+----+--------------+--------------+----------------------+---------------+

|e3e6|failed(162s) |check_device | super_cool_python. |Tue, 06Aug2019 |

| | | | network_device_check |19:40:13 UTC |

|e3e9|succeeded(56s)|recover_device| super_cool_python.net|Tue, 06Aug2019 |

| | | | work_device_recovery |19:42:55 UTC |

|e3ec|succeeded(25s)|confirm_device| super_cool_python |Tue, 06Aug2019 |

| | | | network_device_check |19:43:51 UTC |

|e3ef|succeeded(0s) |finish_success| core.echo |Tue, 06Aug2019 |

| | | | |19:44:16 UTC |

+----+--------------+--------------+----------------------+---------------+Note, IDs and some other fields had minor changes so that the output would be readable on the web.

We can see that the "check_device" task failed which caused StackStorm to then execute the recovery task.

Success of the recovery task caused StackStorm to execute the confirmation task, which ultimately ended in success and triggered the "finish_success" task.

Now we should never need to manually recover one of the Arista devices in our environment! Well, never say never, but we should have fewer manual recovery events.

Conclusion

This setup is of course pretty tailored to our environment and our use cases.

It should be clear, however, that StackStorm (and event-based automation) can be very useful!